Yesterday was a beautiful day in northern Indiana. It was sunny, unseasonably warm, and the snow had almost completely melted from my yard. It was the kind of day that makes a person start to consider putting away the snowblower for the year and mentally plan garden plots. I actually started to put my snowblower in its summer home when I realized…I’d been got.

Friends, it’s Fool’s Spring.

What is Fool’s Spring? It’s that first warm day when Mother Nature fakes you out and makes you think winter is over. Also half of Midwest social media has some version of this meme posted:

I don’t know if it’s a symptom of climate change, but I don’t remember this happening as a kid. Of course, the winters weren’t terribly bad where I grew up. I mostly remember them being grey and the pastures being ungodly muddy. But up here in northern Indiana? Oh, you feel winter. It’s dark and cold from November on, and the threat of snow doesn’t leave until May.

(My undergrad anthropology advisor specialized in Indigenous people of the Arctic. I remember him telling me that interpersonal relationships were prioritized because at some point in the winter you freak out and want to run naked into the cold darkness and you need people you can trust to hold you down until the madness passes. I didn’t understand that fully until I moved up here.)

But anyway, for the past few months I’ve been trying to find a way to visually represent the adoption cycle of LLMs/Gen AI since the traditional graphs aren’t quite right and this felt like an alternative model.

I’ll explain more, but first let’s travel back in time…

Picture it, late winter/early spring 1997. My parents, sister, and I were at Magee’s Diner in Hillsboro, Ohio. I was likely having my usual of a cheeseburger with extra pickles, a cup of vegetable soup, and a slice of either peanut butter or sugar pie for dessert. In the booth behind us was a group of older gentlemen. One of them, who sounded pretty much exactly like Boomhauer on King of the Hill, announced “I got me a computer and I get on that Internet, that dubya dubya dubya and I can just read what I want and talk to people all over the world. You got to get one.” He went on to discuss the various message boards and Usenet groups he was on. I caught my sister’s eye to confirm she was eavesdropping like I was. After the other group left, I said “I’m sorry, but are we in an IBM commercial? What was THAT?”

Actually we kind of were because it finally convinced my mom to get a computer and internet connection after my sister and I strongly suggested it for months.

Now flash forward to today. I can’t find the post to screenshot it, but a high school classmate - and remember, I grew up in a town of 900 people in southern Ohio on the edge of Appalachia and this person still lives in the area - posted on Facebook this morning how much they love and use Anthropic’s Claude. And it took me right back to Magee’s 30 years ago.

While these two unprompted ads for technology are very similar, there is one main difference, one that I’ve been trying to capture for a few months now. For the gentleman in Magee’s, depending on how you want to look at it, person-to-person interaction via computer networks had been going on for years if not decades by the time ol’ Bubba there got online. And on the other side, we are 3 years, 2 months, and 17 days out from OpenAI announcing ChatGPT. I don’t want to say adoption - because is it really tech adoption when you don’t have a choice whether or not to use it? - but the exposure of Gen AI to regular people is so much faster than anything in recent memory.

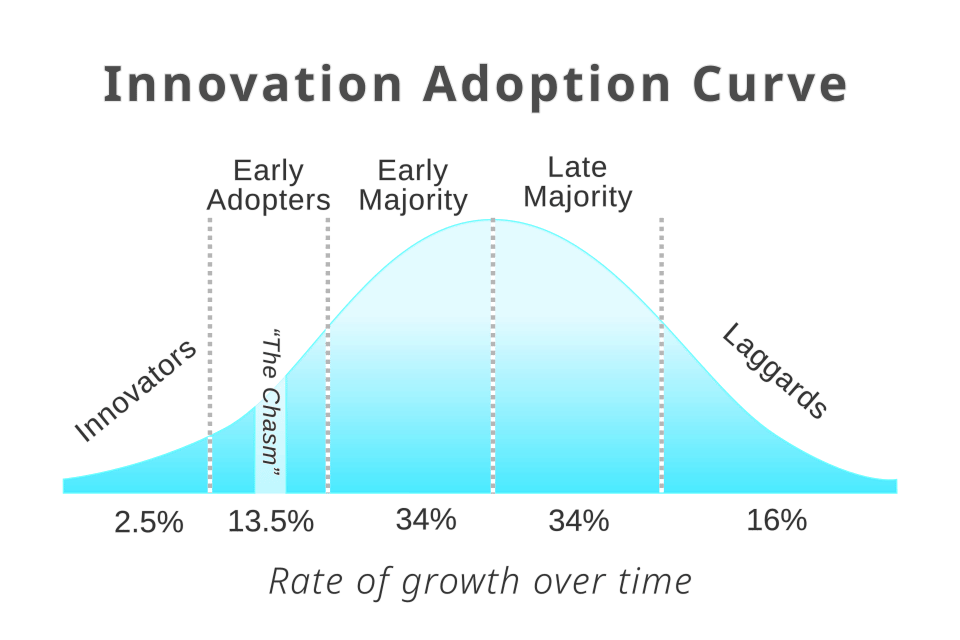

There are two similar-looking charts that get brought out when we talk about technology adoption. If you’re reading this, I’m sure you’ve seen them. The first is the Rogers Bell Curve, also known as the Technology Adoption Life Cycle or Diffusion of Innovations.

I always find it almost funny how subjective this is. Personally, I consider myself Early Majority, maaaaaybe on the edge of Early Adopter. However, compared to most in law- or libraryland, I’m practically Steve Jobs. But on the other hand, most people working in Silicon Valley would count me as basically Amish.

I mean, I don’t even like to use ATMs and I will walk before ever getting into a Waymo so they’re not that wrong.

Subjective or no, the point still stands that generally speaking societies ease into tech adoption, with a few brave or hardy (or perhaps foolish) souls venturing into the unknown and trying out new tools and working out the kinks for the rest of us. Which brings us to the other famous (or perhaps infamous) chart that is frequently trotted out…the Gartner Hype Cycle.

I’ve always assumed that as each segment of the Rogers Bell Curve entered the fray and began to wrestle with adopting a new technology, they each started their own ride on the Gartner Hype Cycle Roller Coaster. And thus, when the Early Majority were in the Trough of Disillusionment, the Innovators had already found their way to productivity and could guide the later starters there.

But now…there was no gentle easing into the post-GenAI world. I always analogize the GenAI revolution to Web 2.0 because it took tech that was generally only available to the most geeky or privileged and made it accessible to anyone with a computer connection. But even then it was an unevenly distributed future and was first used and tested by the more adventurous and tech savvy - which is why we have to call them “blogs” instead of something cool - and eventually trickled down to more widespread use.

And while it did cross “Places”, and maybe became a place of its own, it was still easy enough to avoid. You didn’t HAVE to read a company’s blog or ONLY interact with a cultural institution’s collection on Flickr or ONLY get education on YouTube or from Wikipedia. And you certainly didn’t have to do your job on Facebook.

Over the past three years, there has been a hard launch of Gen AI into every tech interface, be it consumer, enterprise, educational, or what have you. It fundamentally changes workflows as well as our relationship with the technology, going from something that is an extension of self to a collaborator to be managed or…something to be obeyed.

(I don’t want to get into it now because this post is long enough and it’s my day off, but sometime we should sit down and talk about Jacques Ellul and the disturbing and weird view of labor many in tech have and related things.)

(Also one of these days I swear I’m going to fuck around and create a History and Philosophy of Science course focused on legal tech.)

We have all been forced to be Innovators, whether we like it or not. Maybe it’s a sign of getting older, but the number of times I find myself saying in reaction to so many things lately “why is everyone in such a dang gone rush all the time? Slow down and wait before making a decision.” is A LOT.

Late Majority and Laggards are encountering problems and hurdles without a clear path forward and meanwhile, traditional innovators and early adopters are like

But is it?

I am generally optimistic about the future usefulness of GenAI/LLMs, while also accepting that it’s probably not going to be the best solution for every use case that it’s currently being applied to. I think there will be ebbs and flows in the trust in and usefulness of these tools. Maybe we are in a Fool’s Spring where many of the loudest and most well-funded voices are unrealistically optimistic about how we’ll eventually settle on using this technology. I just hope that the hard push to integrate Gen AI into every product and audience before they or it was ready doesn’t send us into another full-blown AI winter.

be well,

Sarah